What is GPT-3?

Episode 12: A very brief intro to Machine Learning, Supervised & Unsupervised Learning, and GPT-3

Machine learning sounds complicated and scary, like something only PhDs can understand. But while advanced machine learning models are generally built by PhDs, anyone can understand the basics.

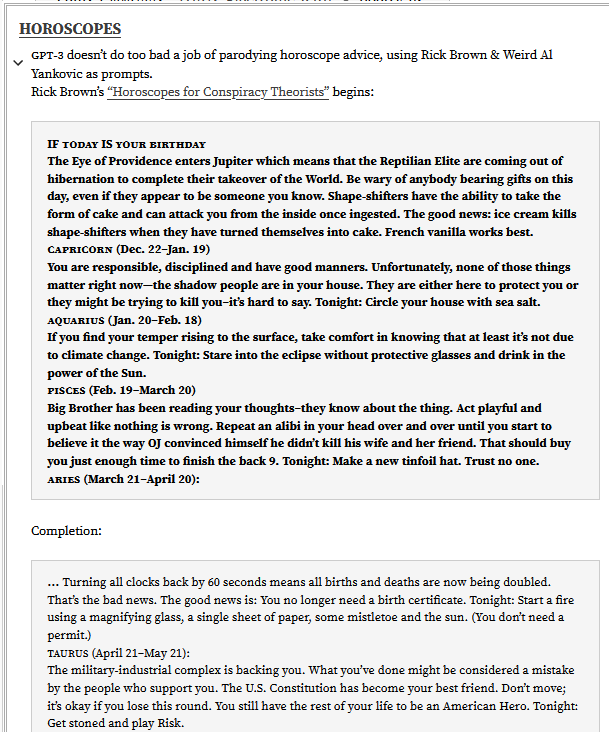

In my experience, this comic is very accurate.

Justin Gage, a data science major, does a good job explaining how machine learning is all about relationships here:

“Now, I majored in Data Science and I still get confused about this, so it’s worth a basic refresher. Machine Learning is just about figuring out relationships – what’s the impact of something on another thing? This is pretty straightforward when you’re tackling structured problems – like predicting housing pricing based on the number of bedrooms – but gets kind of confusing when you move into the realm of language and text.”

I didn’t major in data science, but I’m going to try to explain the basics of machine learning and why GPT-3 (a new machine learning model) is so cool and groundbreaking.

Hopefully you make it to the end to see the funny examples created by GPT-3!

Machine Learning vs Computer Programming

Computer programming is the process of designing a program that lets a computer perform a specific task. Machine learning, on the other hand, trains computers to accomplish tasks they were not explicitly programmed to do.

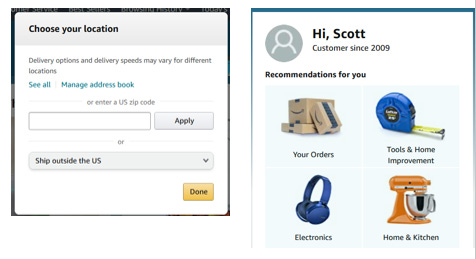

For example, programming would take the shipping details entered on Amazon.com and update them in Amazon’s databases: given a specific input, the output is always the same. Machine learning would take previous Amazon purchases and recommend new products: given an input, the output can change.

In traditional computer programming, someone needs to write the logic that turns the input data into the output data.

In machine learning, the input data and the output data are fed to an algorithm, which creates the program (the logic) itself.

So it boils down to who creates the logic or rules for the program: a programmer or an algorithm.
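To make the contrast concrete, here is a toy sketch in Python (not from any real system). The Celsius-to-Fahrenheit conversion and the example readings are assumptions chosen for illustration: in `to_fahrenheit` a programmer writes the rule by hand, while `fit_line` recovers the same rule from input/output examples using ordinary least squares, one of the simplest learning algorithms.

```python
# Traditional programming: a human writes the rule explicitly.
def to_fahrenheit(celsius: float) -> float:
    return celsius * 9 / 5 + 32


# Machine learning (toy version): the rule is learned from example pairs.
def fit_line(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


# Hypothetical observed examples: inputs and the outputs that went with them.
celsius_readings = [0.0, 10.0, 20.0, 30.0, 40.0]
fahrenheit_readings = [32.0, 50.0, 68.0, 86.0, 104.0]

slope, intercept = fit_line(celsius_readings, fahrenheit_readings)


def learned_to_fahrenheit(celsius: float) -> float:
    # This "program" was produced by the algorithm, not written by hand.
    return slope * celsius + intercept


print(f"learned rule: F = {slope:.2f} * C + {intercept:.2f}")
print(to_fahrenheit(100.0), round(learned_to_fahrenheit(100.0), 6))
```

Both functions end up computing the same thing; the difference is who wrote the formula.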

Two Types of Models: Supervised vs Unsupervised Learning

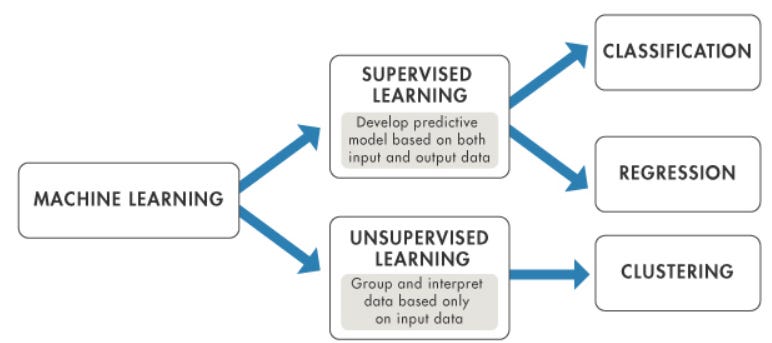

For our purposes we’ll focus on two types of models (supervised and unsupervised), then talk about a cool new unsupervised model called GPT-3 (well, cool to some people).

In a supervised learning model, the algorithm learns from a labeled data set that includes the answers, which it then uses to check its accuracy. An unsupervised model is only given input data, which the algorithm uses to find patterns.

Supervised Learning

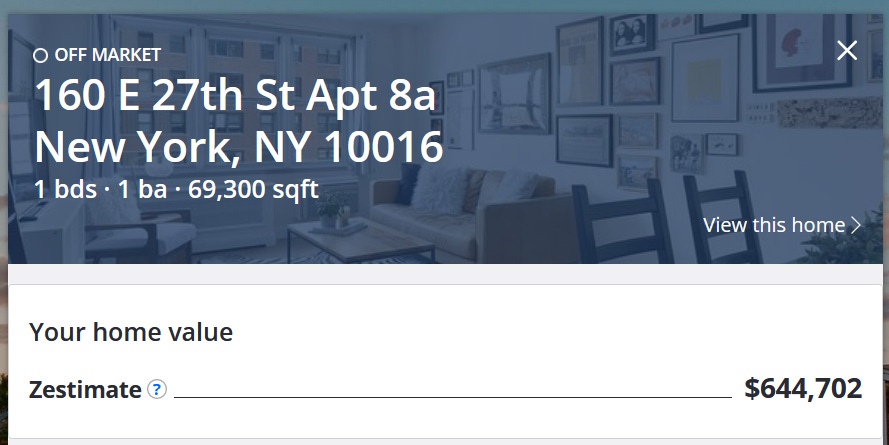

A clear example of supervised learning is Zillow’s Zestimate. Zillow uses features of homes, like location and number of bedrooms/bathrooms, to predict home value.

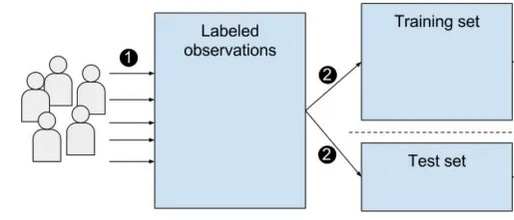

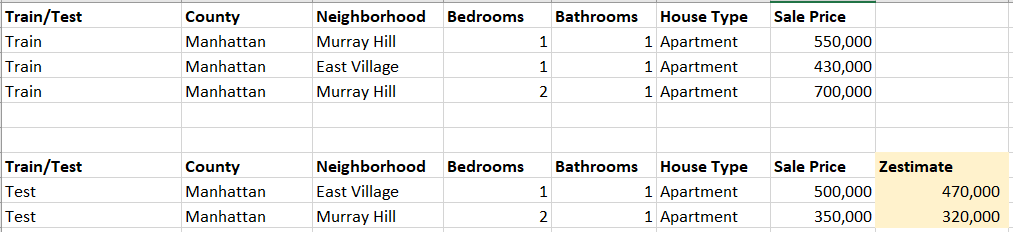

To do this, a data scientist would separate the data into a Training Set, which includes the answers (in this case, the Sale Price), and a Test Set, which is held back to check whether the model built from the Training Set is accurate.

In the example below, the top three rows of data (apartments) are used to determine how much weight to give each input (features like bedrooms/bathrooms). The model is then tested on two new apartments: its output (the Zestimate) is compared to those apartments’ actual sale prices to measure the model’s accuracy.
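Here is a minimal sketch of that train/test workflow in pure Python. The apartment numbers are made up for illustration (they are not the figures in the image, and certainly not Zillow’s model): three training apartments are used to learn an intercept plus a weight for bedrooms and bathrooms via the least-squares normal equations, and two held-out test apartments are used to measure the mean absolute error.

```python
def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def fit(features, prices):
    """Least-squares weights [intercept, w_bedrooms, w_bathrooms]."""
    rows = [[1.0, *f] for f in features]  # leading 1.0 models the intercept
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * p for r, p in zip(rows, prices)) for i in range(k)]
    return solve(xtx, xty)


def predict(weights, feature_row):
    return weights[0] + sum(w * x for w, x in zip(weights[1:], feature_row))


# Hypothetical data: (bedrooms, bathrooms) -> sale price in $1,000s.
train_x, train_y = [(2, 1), (3, 2), (1, 1)], [500, 700, 350]
test_x, test_y = [(2, 2), (4, 2)], [560, 820]

weights = fit(train_x, train_y)
predictions = [predict(weights, row) for row in test_x]
mae = sum(abs(p - y) for p, y in zip(predictions, test_y)) / len(test_y)
print("weights:", [round(w, 2) for w in weights])
print("test predictions:", [round(p, 2) for p in predictions], "MAE:", round(mae, 2))
```

The key point is that the test apartments’ prices are never used to learn the weights; they are only used to grade the model afterwards.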

This is a very simplified version of the Zestimate. As the Zillow CEO says,

“Just to give a context, when we launched, we built 34 thousand statistical models every month. Today, we update the Zestimate every single night and generate somewhere between 7 and 11 million statistical models every single night.”

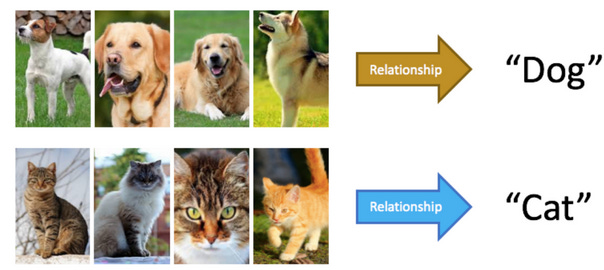

There are two main areas where supervised learning is useful: classification problems and regression problems.

Classification problems use an algorithm to predict which group (class) an item belongs to. For example, a model trained on a labeled data set of dog and cat pictures could determine whether a new picture shows a dog or a cat based on the features of the photo.
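A minimal classification sketch follows. Real image classifiers learn from raw pixels; to keep it short, this assumes each photo has already been reduced to two hypothetical numeric features (ear pointiness and snout length, both invented for illustration and scaled 0 to 1). It uses k-nearest neighbors, a simple classifier swapped in for illustration: a new photo gets the majority label of the k most similar labeled photos.

```python
import math
from collections import Counter

# Hypothetical labeled training data: (ear_pointiness, snout_length) -> label.
training_set = [
    ((0.9, 0.2), "cat"),
    ((0.8, 0.3), "cat"),
    ((0.85, 0.1), "cat"),
    ((0.3, 0.8), "dog"),
    ((0.2, 0.9), "dog"),
    ((0.4, 0.7), "dog"),
]


def classify(features: tuple[float, float], k: int = 3) -> str:
    """Return the majority label among the k nearest training examples."""
    nearest = sorted(training_set, key=lambda item: math.dist(item[0], features))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(classify((0.85, 0.25)))
print(classify((0.25, 0.85)))
```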

Regression problems predict continuous data like the Zestimate — the price of an apartment in Brooklyn based on square footage, location and proximity to public transport.

The key with supervised learning is that the algorithm is trained on specific outcomes, then tested to validate accuracy.

Model training is a great time to slack off…

Unsupervised Learning

Imagine you’re given the pieces to an Ikea chair but not given instructions or even an idea of what to build. This is unsupervised learning.

The model is handed a data set without explicit instructions on what to do with it. The algorithm tries to find structure in the data by looking for patterns or groupings.

Here are three of the main ways to use unsupervised learning, according to Nvidia:
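One common way to find such groupings is clustering. Below is a minimal k-means sketch in pure Python; the bird measurements (wingspan in cm, beak length in cm) are made up, and the algorithm is never told which bird is which. It alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its points. Initializing with the first k points is a simplification for this sketch; real implementations usually use randomized or smarter initialization such as k-means++.

```python
import math


def kmeans(points, k, iterations=10):
    """Group points into k clusters without using any labels."""
    centroids = [list(p) for p in points[:k]]  # naive init: first k points
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids


# Hypothetical, unlabeled bird measurements: (wingspan_cm, beak_length_cm).
birds = [(20, 1.0), (100, 5.0), (22, 1.2), (105, 5.5), (21, 1.1), (98, 4.8)]
labels, centroids = kmeans(birds, k=2)
print(labels)
print([[round(v, 2) for v in c] for c in centroids])
```

The algorithm only outputs cluster numbers; deciding that cluster 1 is "large birds" is still up to a human.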

Clustering: look at a collection of bird photos and separate them roughly by species, relying on cues like feather color, size or beak shape.

Anomaly detection: Banks detect fraudulent transactions by looking for unusual patterns in customer’s purchasing behavior. For instance, if the same credit card is used in California and Denmark within the same day, that’s cause for suspicion.

Association: Fill an online shopping cart with diapers, applesauce and sippy cups and the site just may recommend that you add a bib and a baby monitor to your order. This is an example of association, where certain features of a data sample correlate with other features.
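The association example can be sketched with simple co-occurrence counting. The shopping baskets below are invented, and scoring candidates by rule "confidence" (how often baskets containing item A also contain item B) is one basic association-rule measure, not necessarily what any real store uses.

```python
# Hypothetical past shopping baskets (each is a set of items bought together).
past_baskets = [
    {"diapers", "applesauce", "sippy cups", "bib"},
    {"diapers", "bib", "baby monitor"},
    {"diapers", "applesauce", "bib", "baby monitor"},
    {"coffee", "bread"},
    {"applesauce", "bread"},
]


def confidence(antecedent: str, consequent: str) -> float:
    """Fraction of baskets containing `antecedent` that also contain `consequent`."""
    with_antecedent = [b for b in past_baskets if antecedent in b]
    if not with_antecedent:
        return 0.0
    both = sum(1 for b in with_antecedent if consequent in b)
    return both / len(with_antecedent)


def recommend(cart: set[str], min_confidence: float = 0.5) -> list[str]:
    """Suggest items not in the (non-empty) cart, strongest association first."""
    candidates = set().union(*past_baskets) - cart
    scores = {item: max(confidence(c, item) for c in cart) for item in candidates}
    picks = [item for item, s in scores.items() if s >= min_confidence]
    return sorted(picks, key=lambda item: (-scores[item], item))


print(recommend({"diapers", "applesauce", "sippy cups"}))
```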

As you can imagine, there’s also semi-supervised learning, which uses a mixture of labeled and unlabeled data. For example, a trained radiologist could go through a subset of CT scans or MRIs and label the ones with tumors. The algorithm can use those labels to better understand the goal (finding tumors) and apply it to the unlabeled data.
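One simple semi-supervised technique is self-training (pseudo-labeling): fit on the few labeled examples, label the unlabeled ones with the model’s best guesses, then refit using both. The sketch below assumes each scan has been reduced to a single hypothetical "abnormality score" between 0 and 1 and uses a nearest-class-mean classifier; real medical imaging models are far more involved.

```python
def class_means(examples):
    """Average feature value per label."""
    groups: dict[str, list[float]] = {}
    for x, label in examples:
        groups.setdefault(label, []).append(x)
    return {label: sum(xs) / len(xs) for label, xs in groups.items()}


def self_train(labeled, unlabeled, rounds=3):
    """Repeatedly pseudo-label the unlabeled data and refit on everything."""
    pseudo_labeled = []
    for _ in range(rounds):
        means = class_means(labeled + pseudo_labeled)
        pseudo_labeled = [
            (x, min(means, key=lambda label: abs(x - means[label]))) for x in unlabeled
        ]
    return pseudo_labeled


# Hypothetical data: a radiologist labeled only two scans.
labeled_scans = [(0.9, "tumor"), (0.1, "healthy")]
unlabeled_scans = [0.8, 0.85, 0.2, 0.15, 0.7]

for score, guess in self_train(labeled_scans, unlabeled_scans):
    print(score, guess)
```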

GPT-3: Unsupervised Learning on Steroids

GPT-3 is a MASSIVE unsupervised learning model. Like 175 billion parameters massive.

Input to the model (the unlabeled data): millions of web pages from sources like Common Crawl and Wikipedia, plus some books (Common Crawl is a database that archives billions of public pages from around the web).

Output from the model:

GPT-3 is a neural-network-powered language model. A language model is a model that predicts the likelihood of a sentence existing in the world. For example, a language model can label the sentence: “I take my dog for a walk” as more probable to exist (i.e. on the internet) than the sentence: “I take my banana for a walk.”

So in essence GPT-3 takes a lot of articles, books, etc and tries to predict the next word in a sentence to figure out what ‘good’ sentences look like.
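GPT-3 itself is a huge neural network, but the core idea of scoring sentences and predicting the next word can be shown with a far simpler bigram model, swapped in here purely for illustration. The four-sentence training corpus is invented; the model counts which word follows which, uses add-one (Laplace) smoothing so unseen word pairs still get a small nonzero probability, and sums log-probabilities to score a sentence.

```python
import math
from collections import Counter, defaultdict

# Tiny, invented training corpus.
corpus = [
    "i take my dog for a walk",
    "i walk my dog every day",
    "my dog loves a long walk",
    "i eat a banana for breakfast",
]

# Count how often each word follows each other word.
next_word_counts = defaultdict(Counter)
vocab = set()
for sentence in corpus:
    words = sentence.split()
    vocab.update(words)
    for prev, nxt in zip(words, words[1:]):
        next_word_counts[prev][nxt] += 1


def prob(prev: str, nxt: str) -> float:
    """P(nxt | prev) with add-one smoothing over the vocabulary."""
    counts = next_word_counts[prev]
    return (counts[nxt] + 1) / (sum(counts.values()) + len(vocab))


def log_likelihood(sentence: str) -> float:
    """Sum of log P(word | previous word); higher means more probable."""
    words = sentence.lower().split()
    return sum(math.log(prob(p, n)) for p, n in zip(words, words[1:]))


def predict_next(word: str) -> str:
    """Most frequent word seen after `word` in the corpus."""
    return next_word_counts[word].most_common(1)[0][0]


print(log_likelihood("I take my dog for a walk"))
print(log_likelihood("I take my banana for a walk"))
print(predict_next("my"))
```

The dog sentence scores higher because "my dog" appears in the corpus and "my banana" never does.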

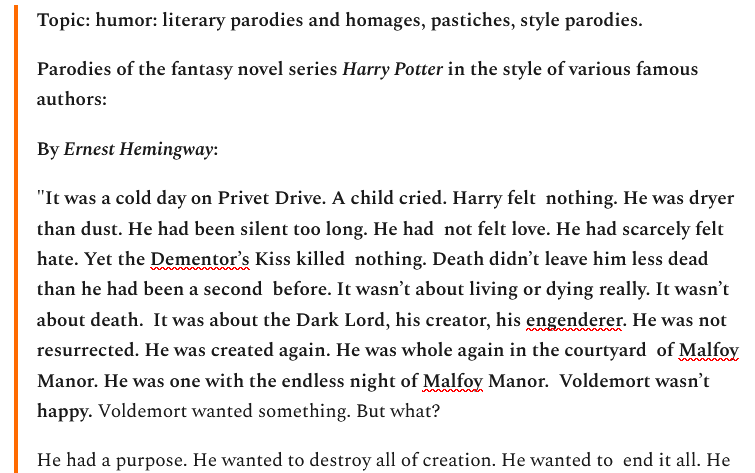

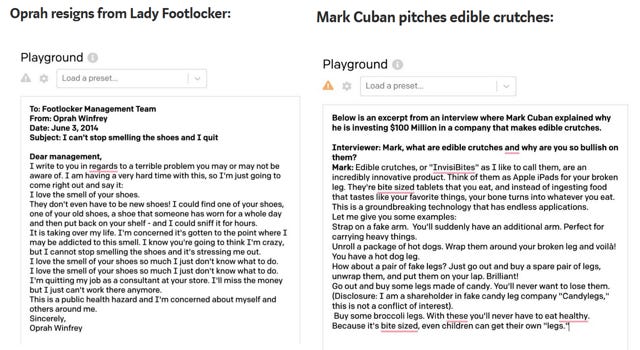

So what does this look like? There are tons of examples on the internet (1, 2 and 3 are good ones), but the excerpt below is really all you need to understand what’s happening…

The author typed in the bold lines below as input, and GPT-3 produced the rest (it actually goes on much longer, but I cut it off because you get the point).

Topic: humor: literary parodies and homages, pastiches, style parodies.

Parodies of the fantasy novel series Harry Potter in the style of various famous authors:

By Ernest Hemingway:

"It was a cold day on Privet Drive. A child cried. Harry felt nothing. He was dryer than dust. He had been silent too long. He had not felt love. He had scarcely felt hate. Yet the Dementor’s Kiss killed nothing. Death didn’t leave him less dead than he had been a second before. It wasn’t about living or dying really. It wasn’t about death. It was about the Dark Lord, his creator, his engenderer. He was not resurrected. He was created again. He was whole again in the courtyard of Malfoy Manor. He was one with the endless night of Malfoy Manor. Voldemort wasn’t happy. Voldemort wanted something. But what?

He had a purpose. He wanted to destroy all of creation. He wanted to end it all. He could have that. He would have that. He didn’t know yet that he could have it. Voldemort had created Harry. Voldemort had never really destroyed Harry. Harry would always be there, a vampire, a parasite, a monster in the kitchen, a drain on the household, a waste on the planet. Harry would never be real. That was what Voldemort wanted. That was what Voldemort wanted to feel. He would have that. He would have everything.

He had a purpose. He was working on something. He had no idea what it was. He was a perfect tool. He was born to be used. He was no one at all. Harry didn’t exist. The little boy with the lightning bolt scar didn’t exist. The boy who had lived did not exist. He never had lived. He never would have lived. He was dead. He had been dead.

Voldemort wanted Harry dead. Voldemort wanted Harry dead so he could be born again. Voldemort was God.

Harry was God.

Voldemort was God.

And Harry, the god of Death, was not going to allow that to happen.

Not yet.

Not ever.

The lightning bolt scar burned.

The boy ran for his life. He knew that he would die. He was alone. His best friends were dead. He knew that he would die. He knew that he was a waste. He was nothing at all.

He had no past. He had no future. He was dead.

The algorithm took the input (continue the story about Harry Potter in the style of Ernest Hemingway) and created a completely new story from scratch. There was no labeling of the data to tell it which were good sentences, what Ernest Hemingway’s style looks like, or even that it should complete a story.

You can see the limitations of the model, though, because while it gets the style of Hemingway and the Harry Potter characters, there are points where the story doesn’t fully make sense (especially for longer outputs).

Why is this important?

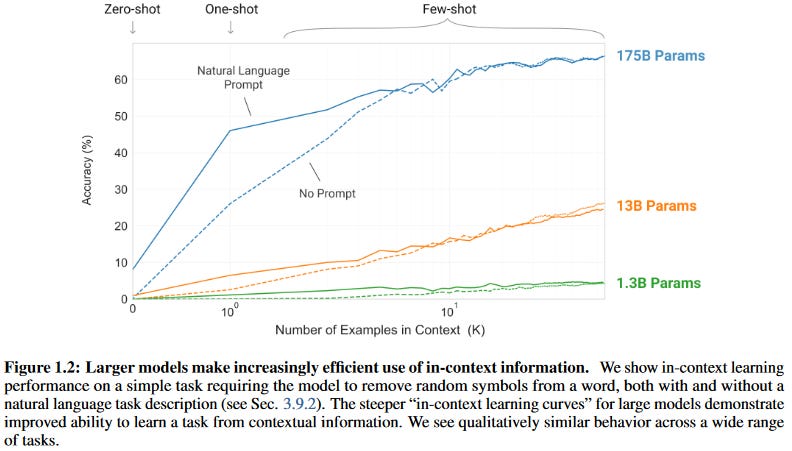

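OpenAI, the Elon Musk-backed organization that created GPT-3, used two methods to increase the model’s accuracy:

- Lots of data
  - 45,000 GB of web articles, books, etc. (before filtering)
  - A model two orders of magnitude larger than GPT-2 (175 billion vs 1.5 billion parameters), and about 10x the next largest, Microsoft's Turing NLG (17 billion parameters)
- More context/input for the model to understand the prompt
  - Called ‘few-shot’, this means the prompt gives the model a description of the task plus a few examples of what you want, rather than a bare instruction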

Notice the Topic label, the description of the style, as well as the beginning of the story in the Harry Potter/Hemingway mashup…
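A few-shot prompt is just text: a task description, a handful of worked examples, and an unfinished final example for the model to complete. The helper below is hypothetical and only assembles such a string; it does not call any OpenAI API, and the English-to-French examples and the `=>` separator are assumptions chosen for illustration.

```python
def build_few_shot_prompt(task, examples, query):
    """Assemble a task description, worked examples, and an open-ended query."""
    lines = [task]
    lines.extend(f"{source} => {target}" for source, target in examples)
    lines.append(f"{query} =>")  # the model is expected to continue from here
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French:",
    [("sea otter", "loutre de mer"), ("cheese", "fromage")],
    "bread",
)
print(prompt)
```

Dropping the examples list would turn this into a "zero-shot" prompt with only the task description.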

The chart from GPT-3’s paper illustrates the increase in accuracy from larger models (more parameters) and more examples in context (few-shot).

The big revelation from GPT-3 is that we (not me or you, but really smart data scientists) can brute force an accurate unsupervised model just by feeding it lots (and lots and lots) of data and giving it some context about what you want it to do.

Until recently, there was talk of a coming ‘AI Winter’: the idea that current models were a dead end on the road to near-human-level intelligence. Yet data scientists are now getting closer to creating artificial intelligence mainly by using lots of data, not necessarily by making the models more complex or changing their structure.

GPT-3 has generated a lot of discussion on Twitter, Reddit, and Hacker News. One post compares the human brain to language models:

A typical human brain has over 100 trillion synapses, which is another three orders of magnitudes larger than the GPT-3 175B model. Given it takes OpenAI just about a year and a quarter to increase their GPT model capacity by two orders of magnitude from 1.5B to 175B, having models with trillions of weight suddenly looks promising.
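The orders-of-magnitude claims are easy to check with a base-10 logarithm of the ratio. The figures below are the ones quoted in this post (100 trillion synapses, 1.5 billion parameters for GPT-2, 175 billion for GPT-3), plus Microsoft's Turing NLG at 17 billion parameters.

```python
import math

human_synapses = 100e12  # "over 100 trillion", as quoted
gpt2_params = 1.5e9
gpt3_params = 175e9
turing_nlg_params = 17e9

# Orders of magnitude = log10 of the ratio between two sizes.
brain_vs_gpt3 = math.log10(human_synapses / gpt3_params)
gpt3_vs_gpt2 = math.log10(gpt3_params / gpt2_params)
gpt3_vs_turing = gpt3_params / turing_nlg_params

print(f"brain / GPT-3: {human_synapses / gpt3_params:.0f}x ({brain_vs_gpt3:.2f} orders)")
print(f"GPT-3 / GPT-2: {gpt3_params / gpt2_params:.0f}x ({gpt3_vs_gpt2:.2f} orders)")
print(f"GPT-3 / Turing NLG: {gpt3_vs_turing:.1f}x")
```

The brain comparison works out to roughly 570x, or about 2.8 orders of magnitude, so "three" is a fair rounding; GPT-2 to GPT-3 is about 117x, just over two orders.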

The ability of models like this to imitate humans is getting scary.

Because of its power and how easily it could be misused, OpenAI has made GPT-3 available only as a private beta, with a waitlist people can sign up for.

It’s clear how interesting and useful it could be once the downsides are addressed. And who knows what’s possible with another 10x increase in the amount of training data. GPT-4 could build a social media following all on its own tweeting Harry Potter/Hemingway mash-ups… no need to be an #Influencer.

Instead of Book/Article/Movie recommendations, I’ll give some funny examples related to GPT-3 (bold parts are the prompt).

If you want to spend an hour going through creative GPT-3 examples like the one below, check out this site: